Various international regulations stress that humans must remain part of the decision-making process, as algorithms cannot be held responsible. Nevertheless, even if the final decision-maker is human, the AI may subconsciously exert undue influence on the outcome.

Relevant biases include over-trust in the machine, often called automation bias, where humans perceive the machine as having superior knowledge and acting flawlessly. As a consequence, results coming from an algorithm are not adequately checked and challenged.

The human decision-maker then confirms the AI result mechanically. To comply with regulations, algorithms do not make decisions themselves; instead they filter information, rank suggestions, or display risk flags.

Such signals can be understood as yellow flags as known from sports. The comparison is not with the yellow card in football (soccer), which is a milder form of punishment rather than a risk warning, but with Formula 1, where a yellow flag signals caution on the track.

When a yellow flag is displayed, drivers must slow down, be prepared to stop if necessary, and not overtake other cars until they have passed the hazard zone.

Yellow flags are typically shown when there is a hazard or obstruction on or near the track, such as a crash, debris, or a car in a dangerous position. This helps ensure the safety of drivers, marshals, and other personnel on the circuit.

If a driver fails to adhere to the yellow flag rules, they may face penalties from race stewards.

A yellow flag with red stripes warns of a slippery track surface. It is usually displayed by marshals to alert drivers to hazardous conditions, such as oil spills, water on the track, or debris, which could make the racing surface treacherous and reduce grip.

When drivers see this flag, they’re expected to exercise caution and adjust their driving accordingly to avoid accidents. Even the way the flag is waved carries meaning: a double-waved yellow flag is a more serious warning.

Seeing any of these yellow flags requires the driver to slow down, understand what caused the warning, and then act appropriately. Strategies can include staying out on the track or coming into the pits to change tires or have other small adjustments made.

In contrast, the red flag means that the practice session, qualifying, or race has been stopped. This is not a punishment, but a measure to protect the drivers and others, such as track marshals.

As in Formula 1, employees using AI tools must treat risk scores and similar symbols as warning flags. A flag should neither automatically disqualify an option nor automatically confirm the option with the lowest indicated risk. Instead, humans are responsible for taking the warning into account and then carrying out an appropriately thorough decision-making process, which may confirm the AI’s assessment or arrive at a different conclusion.
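The idea can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the names `Flag`, `flag_for`, `Decision` and `decide`, the score range of 0 to 1, and the thresholds of 0.3 and 0.7 are hypothetical and not taken from any regulation or real tool. The point is only that the flag informs the human, while approval remains an explicit human input that must be justified whenever a warning is shown.

```python
from dataclasses import dataclass
from enum import Enum


class Flag(Enum):
    GREEN = "green"
    YELLOW = "yellow"
    DOUBLE_YELLOW = "double yellow"


def flag_for(risk_score: float) -> Flag:
    """Map an AI risk score in [0, 1] to a warning flag.

    The thresholds are illustrative assumptions, not recommended values.
    """
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    if risk_score >= 0.7:
        return Flag.DOUBLE_YELLOW
    if risk_score >= 0.3:
        return Flag.YELLOW
    return Flag.GREEN


@dataclass
class Decision:
    option: str
    flag: Flag
    approved: bool
    rationale: str


def decide(option: str, risk_score: float, approved: bool, rationale: str) -> Decision:
    """Record a human decision on an option the AI has scored.

    The flag never approves or rejects anything by itself: `approved`
    always comes from the human reviewer. A flagged option additionally
    requires a documented rationale, whichever way the human decides.
    """
    flag = flag_for(risk_score)
    if flag is not Flag.GREEN and not rationale.strip():
        raise ValueError("a flagged option needs a documented human rationale")
    return Decision(option, flag, approved, rationale)
```

For example, `decide("Supplier A", 0.8, True, "Audit report reviewed")` records an approval despite a double yellow flag, because the reviewer has documented why, while the same call with an empty rationale is refused.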

Humans are ethically and legally responsible for their decisions. If they do not live up to this responsibility, the result could be the black flag: disqualification.

Patrick Henz is the Head of Governance, Risk & Compliance at a leading engineering and plant construction company. In this role, he drives an effective GRC system that holistically combines these subjects with integrity, respect, passion, and sustainability. His responsibilities include Business Resilience and Community Engagement. He actively promotes the idea of a holistic sustainability strategy, where GRC plays a key role, at university workshops and conferences.

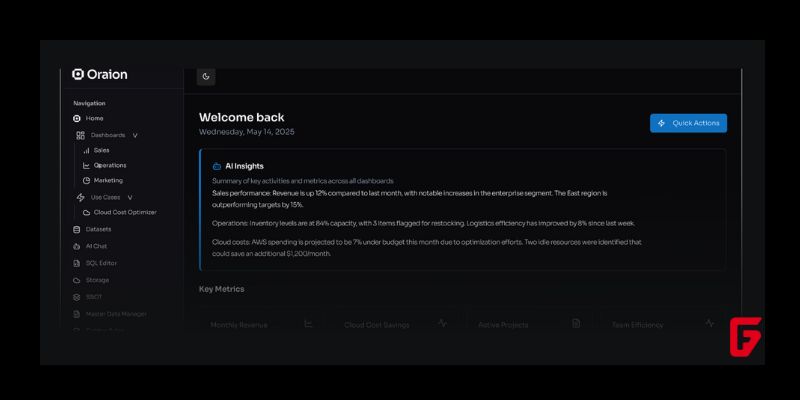

Irish AI startup Oraion has raised €2.9 million in pre-seed funding to expand into the U.S. market and accelerate development of its enterprise automation platform.

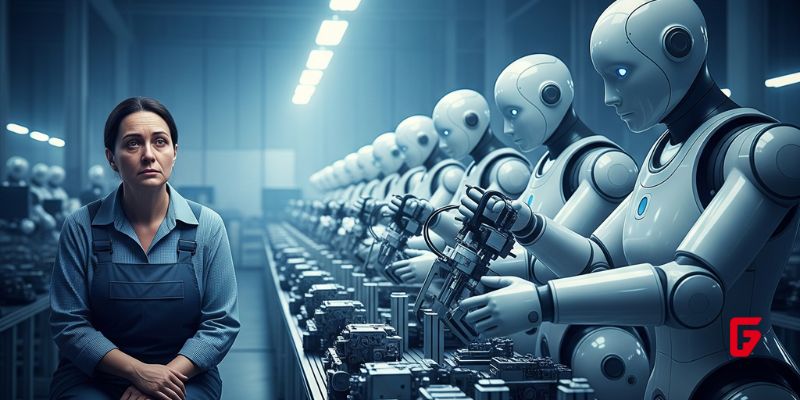

Discover how rapid AI advancements may soon replace traditional jobs, disrupt global economies, and drive the need for new income models like UBI.

xAI’s Grok 4 is redefining artificial intelligence with breakthrough reasoning, top benchmark scores, and new multimodal capabilities—outperforming industry leaders.

Discover how Deepinvent’s AI Innovator compresses R&D from years to minutes, empowering innovators to create breakthrough inventions and accelerate progress across industries.

Coca-Cola’s AI-driven demand forecasting pilot achieved a 7-8% sales surge by combining historical data, weather, and geolocation for smarter inventory decisions. Learn how this tech is reshaping retail.

Perplexity launches Comet, an AI-powered browser that reimagines online browsing with advanced automation, privacy, and a built-in assistant for smarter web interactions.

Levelpath secures $55 million in Series B funding to scale its AI-native procurement platform, driving intelligent automation and efficiency for enterprises.

Explore the rise of embodied AI as it merges robotics and intelligence, transforming industries and redefining how machines interact with our world.

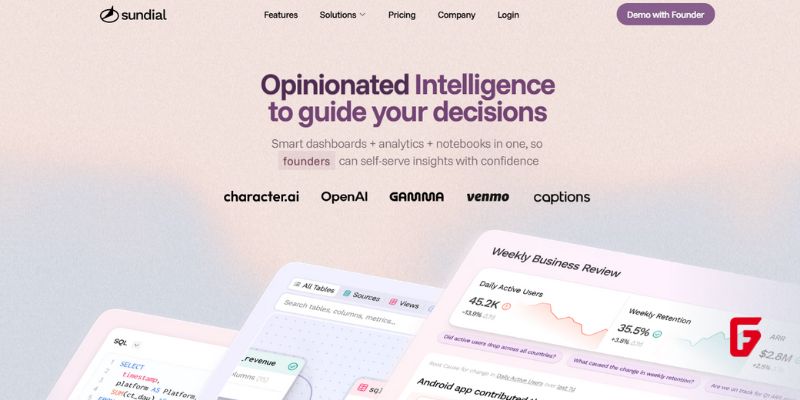

Sundial has raised $16M in Series A funding to accelerate its AI-powered analytics platform, empowering organizations to make faster, data-driven decisions.

SiPearl has secured €130 million to accelerate the development of sovereign AI chips, reinforcing the European Union’s drive for technological independence.

Perplexity is reshaping online search and browsing with AI-powered answers, real-time trusted sources, and a revolutionary browser experience. Explore the future of digital discovery in 2025.

Synfini has raised $8.9M to boost its AI-powered chemistry automation, increasing total funding to $53M and advancing innovation in automated drug discovery.

futureTEKnow is focused on identifying and promoting creators, disruptors and innovators, and serving as a vital resource for those interested in the latest advancements in technology.

© 2025 All Rights Reserved.

To provide the best experiences, we use technologies like cookies to store and/or access device information. Consenting to these technologies will allow us to process data such as browsing behavior or unique IDs on this site. Thanks for visiting futureTEKnow.